Deep Reinforcement Air Combat Learning – Assessment (DRACOLA)

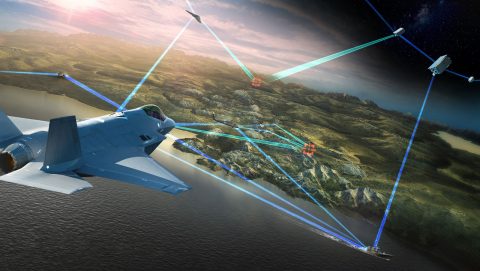

DRACOLA (Deep Reinforcement Air Combat Learning - Assessment) supports operational and combat assessment, closing the loop between assessment, planning, and execution. DRACOLA's goal is to help with a core operational challenge: staying inside the adversary's observe, orient, decide, act (OODA) loop and creating overmatch depend on our ability to rapidly identify, assess, and adjust operations in a dynamic environment. Using Commander's Guidance and Objectives, Joint Target Lists, the Joint Integrated Prioritized Target List (JIPTL), the Joint Tasking Order, and Battle Damage Assessment (BDA) results, DRACOLA tracks the current status of operations, makes reattack recommendations, and forecasts the JIPTLs for future days. DRACOLA also rolls up daily assessments into overall campaign assessments to inform commander decision-making.

DRACOLA’s OBJECTIVES:

Decision quality on par with humans and with the baseline approach

Execution speed 10x faster than the baseline algorithm and 100x faster than a human

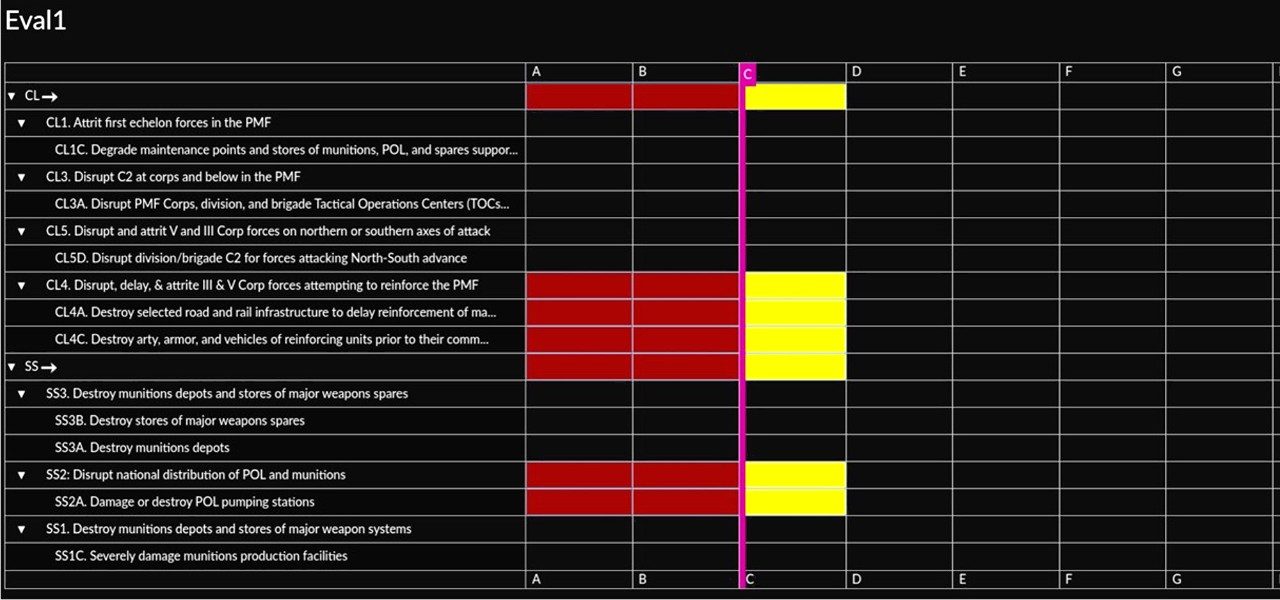

Continuously realign operations (the JIPTL) with objectives (MoEs and MoPs) based on campaign evaluation reports

Recommend updates to daily target lists to ensure achievement of overall campaign objectives

DRACOLA’s VALUE TO OPERATIONS:

Accelerates the analysis of how well (or poorly) a multi-day plan is going

Enables planners to more effectively adjust operations earlier in the planning process to outpace the adversary’s OODA loop

Allows for continuous updates to plans over time as battle damage assessment data becomes available

Accounts for incomplete BDA by making inferences that inform target effectiveness for current and future days

Provides planners with reattack recommendations and forecasted JIPTLs to increase the speed and scale of decision-making

TECHNICAL APPROACH:

Dynamic Temporal Graph Embedding:

A graph represents the dependencies between targets and the tactical tasks specified in the strategy-to-task hierarchy. Temporal graph embedding with a Graph Neural Network (GNN) autoencoder compactly represents key features of the graph and of campaign progress against Measures of Effectiveness (MoE) and Measures of Performance (MoP).
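For readers unfamiliar with GNN autoencoders, the following is a minimal sketch of the general technique, not DRACOLA's implementation. It assumes a standard graph autoencoder (two-layer GCN encoder, inner-product decoder), treats the dependency graph as undirected, and handles time by simply embedding one graph snapshot per campaign day; the source does not say how temporal structure is modeled. The toy graph, the node features, and all function names are hypothetical, and the weights are random rather than trained.

```python
import numpy as np

def normalized_adjacency(adj: np.ndarray) -> np.ndarray:
    """GCN-style normalization D^-1/2 (A + I) D^-1/2 (self-loops added)."""
    a_hat = adj + np.eye(adj.shape[0])
    d_inv_sqrt = np.diag(1.0 / np.sqrt(a_hat.sum(axis=1)))
    return d_inv_sqrt @ a_hat @ d_inv_sqrt

def encode(adj, features, w1, w2):
    """Two-layer GCN encoder: per-node features -> low-dimensional node embeddings."""
    a_norm = normalized_adjacency(adj)
    hidden = np.maximum(a_norm @ features @ w1, 0.0)  # ReLU
    return a_norm @ hidden @ w2

def decode(z):
    """Inner-product decoder: sigmoid(z_i . z_j) as the predicted link probability."""
    return 1.0 / (1.0 + np.exp(-(z @ z.T)))

def reconstruction_loss(adj, z, eps=1e-9):
    """Binary cross-entropy between the decoded graph and A + I (the training objective)."""
    target = adj + np.eye(adj.shape[0])
    probs = decode(z)
    return float(-np.mean(target * np.log(probs + eps)
                          + (1 - target) * np.log(1 - probs + eps)))

rng = np.random.default_rng(0)
# Hypothetical toy strategy-to-task graph: 0 = objective, 1 = tactical task, 2-3 = targets.
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 1],
                [0, 1, 0, 0],
                [0, 1, 0, 0]], dtype=float)
# Untrained random weights; a real system would fit them by minimizing reconstruction_loss.
w1 = rng.normal(scale=0.5, size=(3, 8))
w2 = rng.normal(scale=0.5, size=(8, 2))

# One snapshot per campaign day; hypothetical node features: [priority, MoE/MoP progress, BDA status].
daily_features = [rng.random((4, 3)) for _ in range(3)]
daily_embeddings = [encode(adj, x, w1, w2) for x in daily_features]
daily_losses = [reconstruction_loss(adj, z) for z in daily_embeddings]
```

Each day's 4x2 embedding matrix is the compact representation a downstream policy could consume; training the weights against the reconstruction loss is what forces the embedding to retain the graph's structure.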

Deep Reinforcement Learning:

Deep Reinforcement Learning (DRL) learns the decision-making process without meticulously hand-crafted heuristics. Because the learned policy generalizes across training cases, DRL inference can handle incomplete information, uncertainty, and ambiguity in data.

Deep Neural Network Policy Gradient Method:

Given the embedded graph, the DRL policy network learns to add targets to and remove targets from future days' JIPTLs so that they align with the commander's guidance and objectives and advance progress toward the MoEs and MoPs.
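As an illustration of how a policy gradient method can learn add/remove decisions, here is a minimal REINFORCE sketch under simplifying assumptions: a linear policy stands in for the deep network, each candidate target gets an independent include/exclude (Bernoulli) decision, and a toy per-target value replaces the real MoE/MoP-based reward. The source does not name the specific policy gradient algorithm; REINFORCE is used here only because it is the simplest instance. All names and numbers are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def select_targets(theta, target_embeddings, rng):
    """Sample an include (1) / exclude (0) decision for each candidate target."""
    probs = sigmoid(target_embeddings @ theta)
    actions = (rng.random(len(probs)) < probs).astype(float)
    return actions, probs

def reinforce_update(theta, target_embeddings, actions, probs, advantage, lr):
    """REINFORCE step: grad of sum_i log Bernoulli(a_i; p_i) w.r.t. theta is sum_i (a_i - p_i) x_i."""
    grad_log_pi = target_embeddings.T @ (actions - probs)
    return theta + lr * advantage * grad_log_pi

rng = np.random.default_rng(1)
# Hypothetical: 6 candidate targets with 2-D embeddings (e.g. taken from the graph embedding).
target_embeddings = np.array([[1.0, 0.2], [0.9, -0.1], [0.8, 0.5],
                              [-1.0, 0.3], [-0.7, -0.4], [-0.9, 0.1]])
# Toy stand-in for each target's contribution to MoE/MoP progress.
target_value = np.array([1.0, 1.0, 1.0, -1.0, -1.0, -1.0])

theta = np.zeros(2)
baseline = 0.0
for episode in range(500):
    actions, probs = select_targets(theta, target_embeddings, rng)
    reward = float(actions @ target_value)
    advantage = reward - baseline            # baseline reduces gradient variance
    theta = reinforce_update(theta, target_embeddings, actions, probs, advantage, lr=0.05)
    baseline = 0.9 * baseline + 0.1 * reward  # running-average baseline

final_probs = sigmoid(target_embeddings @ theta)
```

After training, the policy includes the three targets whose inclusion raises the reward with high probability and leaves the other three out; "adding" or "removing" a target then corresponds to the sampled decision differing from the current JIPTL.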

Rewards:

The rewards used for learning include progress toward Measures of Effectiveness (MoE) and Measures of Performance (MoP), target priorities, and minimal perturbation of existing plans.
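One way to combine the three reward terms named above is a weighted sum, sketched below. The weighted-sum form, the weights, the aggregate progress score, and counting perturbation as the number of targets added or removed are all assumptions for illustration; the source lists the terms but not how they are computed or balanced.

```python
from dataclasses import dataclass
from typing import Iterable, Mapping

@dataclass(frozen=True)
class RewardWeights:
    # Hypothetical weights: the source lists the reward terms but not how they are balanced.
    progress: float = 1.0      # MoE/MoP progress
    priority: float = 0.5      # target priorities
    perturbation: float = 0.2  # penalty per change to the existing plan

def jiptl_reward(prev_progress: float, new_progress: float,
                 proposed: Iterable[str], existing: Iterable[str],
                 priorities: Mapping[str, float],
                 w: RewardWeights = RewardWeights()) -> float:
    """Score a proposed JIPTL against the existing one (hypothetical formulation)."""
    proposed, existing = set(proposed), set(existing)
    progress_gain = new_progress - prev_progress            # change in an aggregate MoE/MoP score
    priority_score = sum(priorities[t] for t in proposed)   # assumes list size is capped elsewhere
    changes = len(proposed ^ existing)                      # targets added plus targets removed
    return (w.progress * progress_gain
            + w.priority * priority_score
            - w.perturbation * changes)

priorities = {"T1": 3.0, "T2": 2.0, "T3": 1.0}
# Swap T3 for T2 while MoE/MoP progress rises from 0.40 to 0.55.
reward = jiptl_reward(0.40, 0.55, proposed={"T1", "T2"}, existing={"T1", "T3"},
                      priorities=priorities)
# reward ≈ 0.15 + 0.5 * 5.0 - 0.2 * 2 = 2.25
```

The perturbation term is what discourages the policy from churning the plan: the same target list scores higher when it matches what is already scheduled.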

Training Data:

DRL needs enough data to learn policies that can make inferences despite incomplete data. We start from a set of scenarios built by subject matter experts and automatically augment them to generate a training set large enough for DRL.
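The augmentation step could look something like the sketch below, which is an assumption rather than the documented method: each variant of an expert-built scenario jitters target priorities slightly and hides a random subset of BDA reports to mimic incomplete BDA. The scenario schema, the parameter names, and the two perturbations chosen are hypothetical.

```python
import copy
import random

def augment_scenario(scenario, n_variants, priority_jitter=0.1, bda_drop_rate=0.3, seed=0):
    """Generate variants of an SME-built scenario (hypothetical schema).

    Each variant rescales target priorities by a small random factor and hides a
    random subset of BDA reports (set to None) to mimic incomplete BDA.
    """
    rng = random.Random(seed)
    variants = []
    for _ in range(n_variants):
        variant = copy.deepcopy(scenario)  # never mutate the expert-built original
        for target in variant["targets"]:
            target["priority"] *= 1.0 + rng.uniform(-priority_jitter, priority_jitter)
            if target["bda"] is not None and rng.random() < bda_drop_rate:
                target["bda"] = None  # BDA report not yet available
        variants.append(variant)
    return variants

base = {"name": "sme_scenario_1",
        "targets": [{"id": "T1", "priority": 3.0, "bda": "destroyed"},
                    {"id": "T2", "priority": 2.0, "bda": "damaged"},
                    {"id": "T3", "priority": 1.0, "bda": None}]}
training_set = augment_scenario(base, n_variants=100)
```

Masking BDA during augmentation is one way to expose the policy to the incomplete-information cases it must handle at inference time.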

Operational Assessment View

Combat Assessment View

